We all know that organic traffic is volatile. However, it is still extremely frustrating to experience a drop in organic website traffic, especially when we are responsible for explaining this drop to clients or management. It can be easy to blame yet another Google algorithm change, but we can also treat the drop as an opportunity to examine changes and issues with the site itself.

With so many potential reasons behind a traffic drop, where do you even start looking? Here, we give you six simple steps to help you diagnose and resolve a major drop in organic traffic to your site.

Checklist – Start Troubleshooting Here

1. Are there any changes in search demand, or are seasonality factors at play?

The first thing to do when experiencing a drop in organic traffic is to ask yourself ‘was the drop caused by lower search interest or seasonality factors?’

It is normal for organic traffic to fluctuate to a certain extent. Organic traffic largely depends on search demand and on your site’s keyword rankings. Search demand (or search volume) can fluctuate and keyword ranking positions tend to shift around a lot, so you are likely to see this reflected in your organic traffic.

Before doing a deeper dive into the severity of your traffic drop, check out these 3 simple potential causes first:

Check for changes in search demand

Search demand is reflected in the search volume of keywords. When search interest decreases for a query, then the average monthly search volume for that keyword also decreases. If that happens to a query or set of queries for which your site is ranking, then you will likely experience lower organic traffic numbers.

One great example of drastic changes in search demand is the global search interest in the query “GDPR.” Google Trends is a great tool for getting insights into search demand over time, both globally and on a country level. Looking at Google Trends, we see that the global interest in GDPR (General Data Protection Regulation) spiked from April to June in 2018, which was the time leading up to and directly after its implementation on May 25, 2018. Naturally, if your site was ranking well for the term GDPR, you would likely have experienced a sharp drop in organic traffic starting from the end of June 2018.

As you can see, tracking changes in search demand over time can help you explain traffic drops.

Monitor public holidays in your target markets

Depending on the industry you’re in, public holidays can leave a dent in your organic traffic. It is generally a good idea to track any public holidays not just for the country in which you are located, but also the countries from which most of your traffic originates.

To check where your site’s visitors are from in Google Analytics (GA), navigate to Audience > Geo > Location. Then outside of GA, track the public holidays in the countries where most of your visitors are from. Perhaps you will find that public holidays in your primary markets were the reason behind your traffic drop.

Be aware of trends in seasonality

Seasonality is another industry-dependent factor that can significantly affect your site’s organic traffic. It is normal for sites in certain industries to see organic website traffic go down during certain times of the year. For example, e-commerce flower sites are likely to see large drops in organic traffic after Mother’s Day and Valentine’s Day, but due to seasonality, this is no cause for immediate concern.

Again, Google Trends is a great tool for revealing seasonality trends for a specific query. If you’ve had GA set-up for your site for more than a few years, you can also use the comparison function in GA to see if previous years also saw a drop in organic traffic around the same time of year.

If changes in search demand, public holidays, or seasonality do not explain your organic traffic dip, treat the drop as significant only once you see a pattern of decline that lasts for at least a week or two. This timeframe is in no way set in stone, but it is a suitable benchmark.
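The "week or two" benchmark above can be turned into a rough check on exported daily organic sessions. The following is a minimal sketch, not a standard method: the function name, the 28-day baseline, the 14-day window, and the 20% threshold are all assumptions chosen for illustration, and you would tune them to your own site.

```python
from statistics import mean


def is_sustained_drop(daily_sessions, baseline_days=28, window_days=14, threshold=0.2):
    """Return True if the average of the most recent `window_days` is at least
    `threshold` (as a fraction) below the average of the `baseline_days` before it.

    All default values are illustrative assumptions, not industry standards.
    """
    if len(daily_sessions) < baseline_days + window_days:
        raise ValueError("not enough daily data for the chosen baseline and window")

    recent = daily_sessions[-window_days:]
    baseline = daily_sessions[-(baseline_days + window_days):-window_days]

    baseline_mean = mean(baseline)
    if baseline_mean == 0:
        return False  # no baseline traffic to compare against

    relative_change = (mean(recent) - baseline_mean) / baseline_mean
    return relative_change <= -threshold
```

Comparing averages over a window, rather than single days, keeps one bad day (a public holiday, for instance) from triggering a false alarm.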

If your traffic drop appears to be connected to any of the factors listed above, then wait it out, bookmark this checklist, and keep an eye on your organic traffic before taking further action. If your traffic drop does not appear to be connected to any of the factors listed above, then let’s move on to check your analytics set-up.

2. Are there any issues with your analytics set-up?

Malfunctions with your site’s tracking code or analytics set-up can happen to even the best webmasters—and these are usually caused by honest mistakes.

Problems with data collection are especially common when you have different parties working on your site, such as agencies, freelancers, and other employees. Here’s how to check whether your analytics set-up is the cause of your organic traffic drop:

Check if your tracking code is working

First things first: Check if your tracking code is working. A working tracking code sends traffic data to your analytics account. Certain changes to your website, such as customizations to the tracking code or the installation of certain plug-ins or scripts, can cause your tracking code to disappear or to stop working. This leads to missing traffic data.

To verify if your Google Analytics tracking code is working, open GA, and navigate to Admin > Tracking Info > Tracking Code. Here you’ll be able to see the status of your tracking code and any real time users of the site.

If the tracking code is marked as not receiving any traffic, then this is very likely the reason why your site’s reported traffic has dropped. Google has some great resources that describe common tracking set-up mistakes and how to solve them.

Check if every page contains the right tracking code

Even if your tracking code is working correctly, you can still be missing out on traffic data if the code isn’t implemented on all the pages of your site. Traffic to pages without a tracking code will not be recorded. Missing tracking codes are especially common among sites that have manual implementation of the code. Manual implementation is prone to human error, such as forgetting to put the tracking code on a new page.

To prevent these types of mistakes and to simplify the managing of tags on your site overall, it is a good idea to use Google Tag Manager. This tool can help you implement all kinds of codes, including tracking codes, on every page on your site simply by setting up one single tag.

In some cases, you may have multiple properties in Google Analytics, each with its own unique tracking code. In this scenario, it’s possible to accidentally mix up the tracking codes and implement the wrong code on your pages, leading to the traffic being recorded under a different property in GA.

To verify if all your pages contain a tracking code, you can use crawling tools, such as Screaming Frog. Screaming Frog can crawl every single page on your site to check for the presence of a tracking code. When doing this, you can also check if the correct tracking code was implemented on all your pages.
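If you already have the HTML of your pages (for example, from a crawl export), the same check can be scripted. The sketch below is an illustration only: the function names are hypothetical, the pattern assumes tracking IDs of the form `UA-XXXXX-Y` or `G-XXXXXXXXXX`, and it only finds IDs that appear literally in the page source. If the code is injected through Google Tag Manager, the ID may not be present in the HTML at all.

```python
import re

# Assumed ID formats: "UA-12345-1" (Universal Analytics) or "G-ABC123DEF4" (GA4).
TRACKING_ID_PATTERN = re.compile(r"\b(UA-\d{4,10}-\d{1,4}|G-[A-Z0-9]{6,12})\b")


def find_tracking_ids(html):
    """Return the set of tracking IDs that appear literally in the HTML."""
    return set(TRACKING_ID_PATTERN.findall(html))


def audit_pages(pages, expected_id):
    """Classify each page as 'ok', 'missing', or 'wrong'.

    `pages` maps a URL to its HTML source; `expected_id` is the ID that
    should be present on every page.
    """
    report = {}
    for url, html in pages.items():
        ids = find_tracking_ids(html)
        if not ids:
            report[url] = "missing"
        elif expected_id in ids:
            report[url] = "ok"
        else:
            report[url] = "wrong"
    return report
```

Pages reported as "wrong" are the mixed-up property case described above, while "missing" pages are the ones whose traffic is not being recorded at all.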

Verify whether any changes have been made to your Google Analytics settings

There are plenty of settings within Google Analytics that can be tinkered with and that could potentially lower reported organic traffic. Changing up certain settings, such as channel grouping and filters, can result in organic traffic being filtered out or attributed to a channel other than organic.

Check with anyone who has admin access to your Google Analytics if they have recently altered any settings in GA to verify if this could be the reason behind your organic traffic drop.

If there are no problems with your tracking code implementation or analytics set-up, then it’s time to see if there are any technical problems with your site.

3. Has your site experienced any technical problems?

While there are more technical problems that can affect traffic than we can cover in this article, the following technical issues are the most common culprits behind sharp drops in organic traffic:

Indexation Issues

Having your pages removed from Google’s index can result in sharp drops in organic traffic, as your pages will no longer be able to show up in the search results. These are the 3 main causes of deindexation:

Accidental Implementation of the Noindex Directive

It is not uncommon for noindex tags or directives to be accidentally implemented on (the wrong) pages. The noindex directive is placed in the <head> section of the HTML code, and it is used to tell search engines that you do not want the page to show in search results.

The tag can prevent new pages from being indexed and can also be used to remove previously indexed pages from the index, as long as crawlers are not blocked from accessing a page using robots.txt.

Other than doing manual checks, a quick site crawl, using a crawling tool like Screaming Frog, can tell you exactly which pages contain a noindex tag. This is what a noindex tag looks like:

<meta name="robots" content="noindex">
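For a handful of pages, a small script can do the same check as a crawler. This is a minimal sketch using Python's standard `html.parser` module; the class and function names are hypothetical. It only inspects robots meta tags in the HTML, so a noindex sent through an HTTP response header would not be detected.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> and
    <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html):
    """Return True if the page asks search engines not to index it.

    The directive "none" is treated as noindex because it is shorthand
    for "noindex, nofollow".
    """
    parser = RobotsMetaParser()
    parser.feed(html)
    return bool(parser.directives & {"noindex", "none"})
```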

Accidental Blocking of Crawlers using Robots.txt

Robots.txt is a file, placed at the root of your domain, that uses the robots exclusion protocol to instruct robots (typically search engine crawlers) on how to crawl your site. It is most frequently used to disallow the crawling of certain pages, file types, or site sections.

If a search engine can’t crawl your pages, then it generally can’t index their content either. Hence, pages can be accidentally blocked from indexation by a simple mistake in your robots.txt file. For example, just two lines can keep your entire site out of the index:

User-agent: *

Disallow: /

The above text means that none of the different crawlers out there (user-agent: *) are allowed to crawl any of the URLs on your site (Disallow: /).

As you can see, seemingly small mistakes in the robots.txt can have huge consequences.

Google Search Console (GSC) offers a robots.txt tester that can help you check for errors. This tool also allows you to check specific URLs to see if the page is blocked from access by Googlebot.
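A similar check can be run locally with Python's standard `urllib.robotparser` module. The sketch below is only an approximation of what a real crawler does: the function name is hypothetical, and the standard-library parser may not interpret every rule (wildcard patterns, for example) the way Googlebot does, so treat the GSC tester as the authority.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt content disallows
    for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

Passing the robots.txt content in as a string means you can test a draft of the file before it goes live, rather than finding the mistake after deployment.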

Unprepared for Mobile-First Indexing

Google rolled out mobile-first indexing in 2018, and many sites have already been switched to mobile-first. Mobile-first indexing means that Google will index the mobile version of your pages instead of the desktop version. If your site is responsive, then this will not be an issue as both versions of the page should contain the same content.

However, if your mobile URLs are different from your desktop URLs, or if your site is not mobile-friendly, you could face negative consequences when your site is switched to mobile-first by Google. You will be notified with a message in GSC when this switch is made. In the worst-case scenario, your pages won’t be indexed at all.

To prevent this, ensure that your site is mobile-friendly. The safest way to ensure this is to make your site responsive. In the meantime, you can use Google’s mobile-friendly test tool or the mobile-usability report in GSC to check if your site is ready for mobile-first indexation.

Crawl Errors

Broken pages, server errors, redirect errors—these issues can lead to crawl errors. In most cases, URLs with crawl errors can’t and won’t be indexed.

Again, GSC is your best friend, as it offers an index coverage report that will highlight any crawl errors.

Manual Actions

Manual actions are penalties issued by Google employees that can result in your pages or even your entire site being removed from Google’s index. You will receive a notification in your GSC account when a manual action is imposed on your site. You can also check the “Manual Actions” section for any warnings.

Manual actions can be imposed for different reasons, for example when there are pages on your site that are not compliant with Google’s guidelines or when your site has been found to engage in unnatural link building schemes.

When notified about a manual action, you will also receive suggestions and guidelines on how to fix the problem that you were penalized for.

Site Down Time

Last but not least, simply having your site down or offline for a few hours or days can leave a huge dent in your traffic numbers. Forgetting to renew your domain registration, having issues with your servers, or suffering a security breach can all lead to site down time.

Having a great technical support or developer team means that you will be notified right away of any site down time. You can also leverage many free online tools, such as DownNotifier, to notify you when your site is down.

The above technical issues are all factors that can lead to drops in organic traffic. If there were no technical problems found with your site, then it’s time to take a look at your keyword ranking performance.

4. Are there any major keyword ranking drops or losses?

We all know that the more ranking keywords you have and the higher their position, the more organic traffic you’ll see. This is exactly what makes keyword rankings so coveted within the industry. Conversely, a decrease in ranking position or lost rankings for your core keywords can lead to a drop in organic traffic.

Here’s what you can do to check if changes in keyword rankings were the cause behind your site’s traffic drop:

Track your keyword rankings

It is generally best practice to track your site’s keyword ranking performance to get an idea of how you are performing over time and to get a sense of when to sound the alarm.

As manual checking can be a tedious and time-consuming task, it is best to automate this process with a useful keyword rank tracking tool, like Dragon Metrics.

As a rule of thumb, it is a good idea to critically assess your keyword ranking performance once a month. Keyword ranking positions can be extremely volatile due to algorithm changes and constant changes in your competitors and their strategies. Because of this volatility, it is best not to be fixated on ranking performance over a short time, such as days or even a few weeks. Generally speaking, we do not recommend taking action until you see a pattern of decline in rankings that lasts for at least a month.

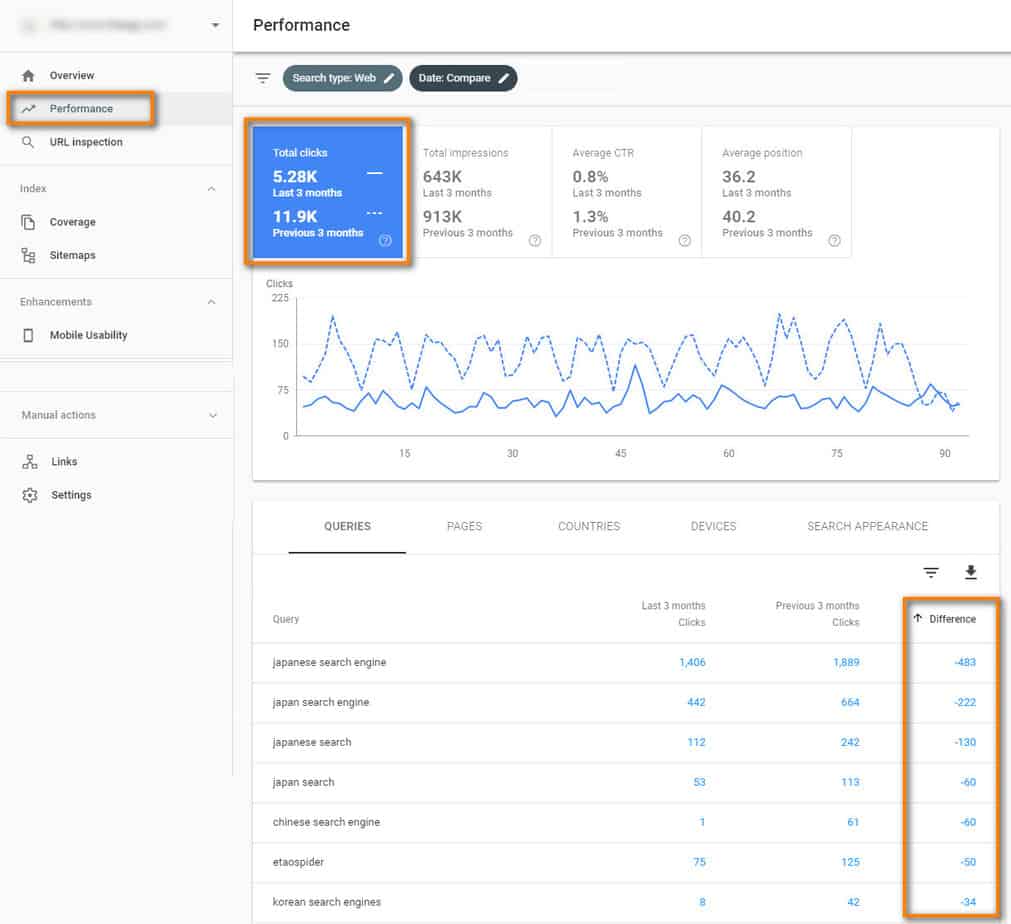

Monitor which keywords drive fewer clicks to your site in Google Search Console

GSC offers an extremely helpful feature that shows you which keywords drive the most (and the least) traffic to your site. This is useful to pinpoint which exact keywords were responsible for a drop in organic traffic during a specific time.

We recommend using the comparison feature in GSC’s performance report to identify changes in clicks for specific keywords over the time in which your site experienced a drop. In doing this, you can go as granular as looking at keyword performance for a specific URL or even filtering out specific keywords in your research (such as branded keywords).

After looking at the performance report and identifying which keywords contributed to a drop in organic traffic, you can focus on strengthening your ranking position and your site’s authority around these keywords. This is necessary to regain the organic traffic that this keyword was previously bringing.

In the scenario seen in the screenshot above, we should focus on strengthening our site’s keyword ranking performance for the query “Japanese search engine,” as this keyword saw 483 fewer clicks over 3 months.

To do this, we should focus on on-page optimizations for this keyword and supplement our pages with more in-depth information around the keyword’s theme. It is also a good idea to see who is currently outranking you for this keyword, to learn what they’re doing that you aren’t.
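If you export the performance report for two periods, the comparison described above can also be done in a few lines of code. This is a minimal sketch with a hypothetical function name; it assumes you have already loaded each export into a dictionary that maps a query to its click count.

```python
def click_changes(previous, current):
    """Return (keyword, change_in_clicks) pairs, biggest losses first.

    `previous` and `current` map each query to its clicks in the earlier
    and later period. A keyword missing from one period counts as 0 clicks.
    """
    keywords = set(previous) | set(current)
    changes = [(kw, current.get(kw, 0) - previous.get(kw, 0)) for kw in keywords]
    # Sort by change (most negative first), then alphabetically for stable output.
    return sorted(changes, key=lambda item: (item[1], item[0]))
```

Keywords that disappear entirely from the later period show up as a loss of all their previous clicks, which makes lost rankings easy to spot next to declining ones.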

As a final pro tip, we recommend downloading GSC’s performance report data at regular intervals. Many are unaware that GSC only retains performance data for a limited period (16 months in the current performance report), after which it is removed. Downloading historical data from GSC can help you troubleshoot traffic drops or changes in keyword performance that happened further in the past.

Verify whether any changes were made to your site’s content

Some changes that you make to the content on your site, often in an attempt to change things for the better, can result in a loss of organic traffic.

This is commonly seen when you’re working with different teams that focus on the visual elements or brand messaging of a site, such as a UX team, creative agency, or design team.

Removing or cutting down on content, the rephrasing of meta titles and header tags, and the replacing of content with images or videos—these things can lead to a loss in organic traffic. If your text content was the main reason why your pages were ranking, then cutting down on that will have a negative impact on your rankings. This will ultimately be reflected in your organic traffic numbers.

If you’re working with any of the above teams, then do a quick check with them to see what kind of changes they’ve made to your pages. If content cutting or the rephrasing of content was the culprit behind your loss in rankings and traffic, then it’s a good idea to rethink how you can best combine SEO and UX on your site.

If your site did not experience any keyword ranking losses, then it’s time for us to examine changes to the SERP.

5. Has the SERP landscape changed?

Looking at the search engine results page (SERP) landscape can help explain why your site experienced a drop in organic traffic. There are several changes to the SERP that can impact your site’s performance:

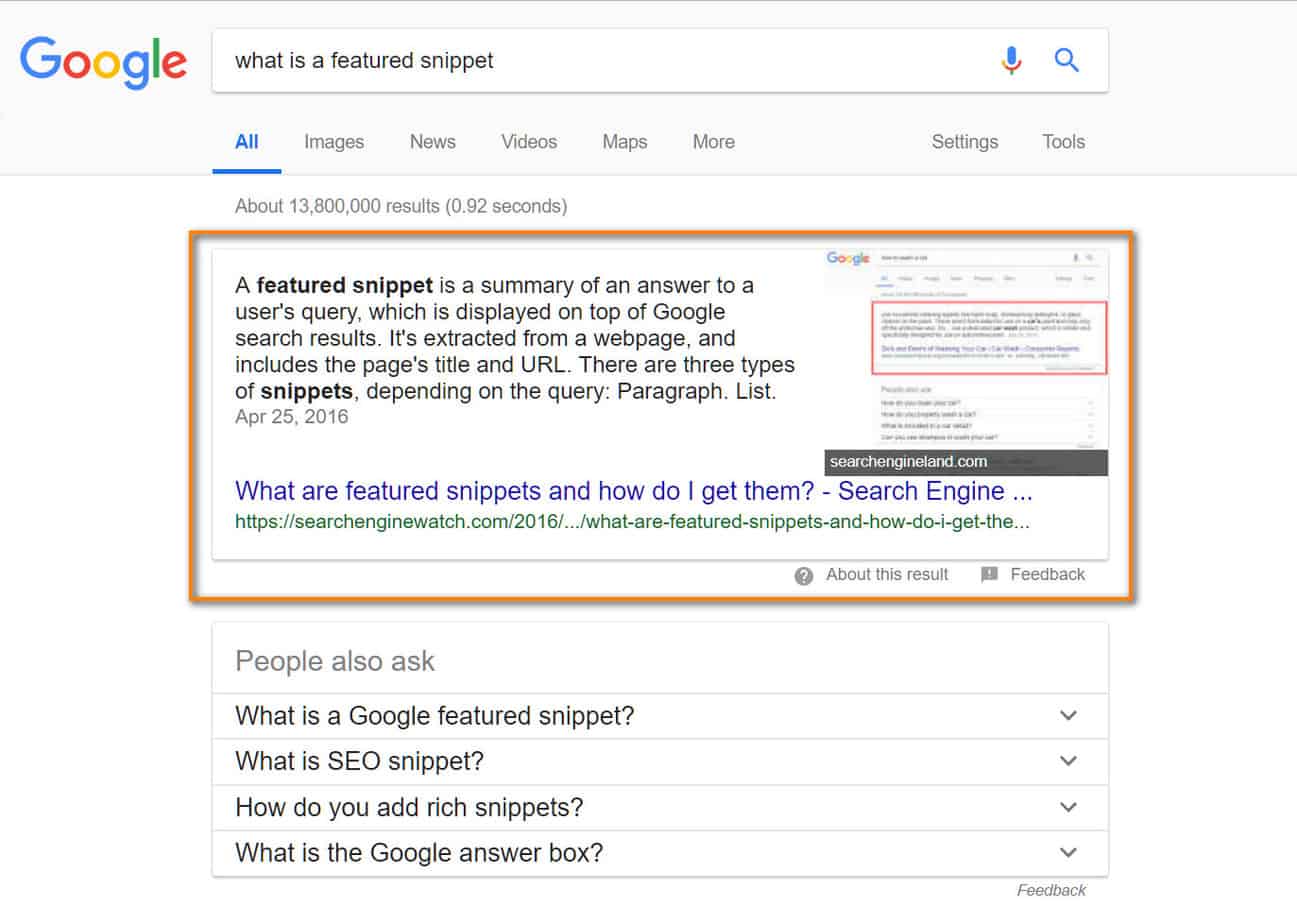

Appearance of a Featured Snippet

When Google introduces a new featured snippet for a query or set of queries that your site was ranking well for, you are very likely to see a drop in organic traffic. This can even be the case when your site’s search result is the one that received the featured snippet.

Featured snippets generally reduce the click-through rate, or CTR, for search results, as they display the answer to the query on the SERP, reducing the need to click. The image below shows a featured snippet for the query “what is a featured snippet?”.

The above example shows how the owner of a site featured in a snippet might experience an organic traffic drop, as the snippet itself already provides a solid answer to the question.

While we do not have full control over what Google decides to show in the featured snippet, we can try to influence the existing snippet by optimizing our page for a featured snippet. In doing so, you can make an effort to have the content in the snippet truncated, for example by applying a list or paragraph format to the content on your page. Usually, full lists or paragraphs are too long to fully show in a snippet, so the content will get truncated, meaning the user will have to click on the snippet to view the source page for the complete information.

If you really do not want your site to be used in a featured snippet, then Google provides a way to opt out of this. Adding the below tag to your page will result in the removal of the featured snippet. Note that this tag also removes the regular text snippet from your search result. Also keep in mind that removing the featured snippet from your site’s search result can lead to a different site being featured instead.

<meta name="googlebot" content="nosnippet">

If another site’s featured snippet is negatively affecting the traffic to your site, there are a few things you can do.

- You can try and ‘steal’ the featured snippet by optimizing your content accordingly. Even if you’re not trying to obtain the featured snippet yourself, you should still optimize your pages for the query you want to rank for to get a better chance of showing on the first page. When you do optimize for snippets, aim for a snippet that tempts the user to click through to your site.

- You can also leverage paid search to get your site to show as high as possible on the SERP.

- Lastly, you can simply sit back, do nothing, and wait it out. Featured snippets tend to be very volatile and may not appear permanently for a query.

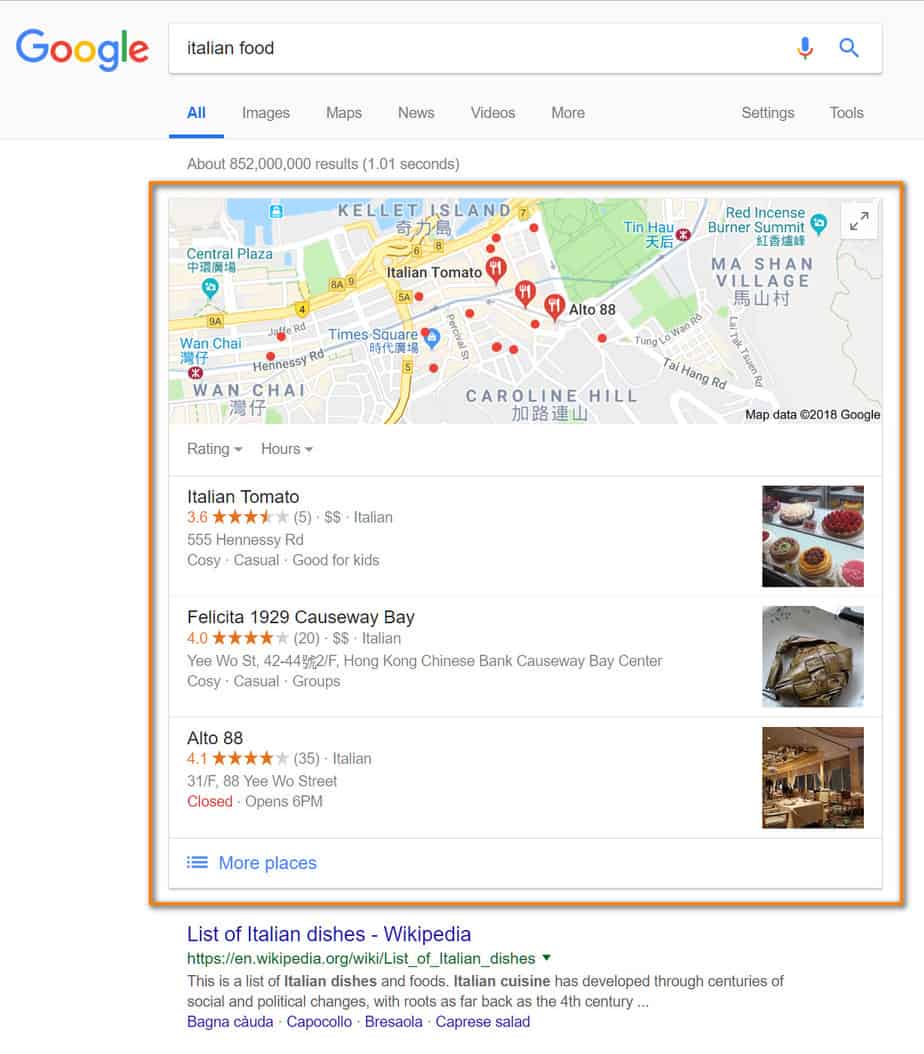

Not Featured in a Local Pack

With Google’s heavy emphasis on providing searchers with localized results, nowadays more and more queries return a local pack. A local pack shows up high on the SERP and is a series of 3 local business results shown under a map. The information displayed is derived from the company’s Google My Business (GMB) listing.

When you’re a physical business and your company is not featured in the local pack, you’re most likely losing out on a large portion of organic traffic. A local pack takes up a lot of real estate on the SERP and pushes down other organic search results. For example, as you can see in the screenshot below, the keyword ‘Italian food’ returns a local pack even before it shows information on Italian cuisine or Italian recipes.

If you are the owner of a local business, you should aim to appear in the local pack. You can do this by improving your local SEO, which usually starts with optimizing your Google My Business listing.

Start by familiarizing yourself with local search ranking factors, as these are different from traditional SEO ranking factors. You can then tweak your local strategy to optimize for these local ranking factors.

A New Competitor or Improved Competitor Performance

Even when you’re doing everything right, you can still lose traffic and rankings to your competitors. Your competitors could simply be doing a better job than you are in terms of SEO and content marketing.

New competitors could enter your market at any moment, and existing competitors can change up their digital strategy at any time as well. This is why it is a good idea to keep an eye on your competitors and monitor their digital marketing activities. Things to look out for include (but are not limited to):

- How much content do they have on the topic, and how good are they at answering the query?

- How fast does their site load?

- How many backlinks do they have?

- How is the user experience of their site?

When the performance of your competition is the reason behind your website’s drop in rankings and organic traffic, you should understand what they are doing differently from you or what they’re doing that you’re not. You can then identify any gaps in your strategy and learn from some of their tactics. Depending on what you find, this could mean different things, from allocating more resources to outreach and link building to revamping your content strategy.

Major Google Algorithm Changes

While we can’t always blame a change in Google’s ranking algorithms for a drop in organic traffic, we can’t completely rule it out either. A change in algorithm can lead to immediate lower keyword rankings and lower organic traffic. Sometimes the negative impact is short term, as Google needs some time to readjust your site’s new position in the SERP, and sometimes the drop in traffic lasts.

You can check for major algorithm updates on sites that keep track of such changes, such as Moz. Moz tracks major algorithm changes and lists each update’s implementation date as well as its known effects. This can help you verify whether an algorithm change occurred during the period in which you experienced an organic traffic drop.

6. Has your site lost any valuable links?

The last item on our checklist is to examine off-page factors. Backlinks are the most important off-page ranking factor in SEO, so our analysis will focus on incoming links to your site.

Check whether your site lost any valuable backlinks

Losing valuable backlinks can have a huge impact on your site’s performance in search. Google sees backlinks as votes of confidence for your site, and hence sites with a large number of quality backlinks have a better chance of ranking for competitive keywords than sites with fewer backlinks. Even the removal of a single high-quality backlink can lead to a drop in rankings and organic traffic.

You can monitor backlinks using tools like Ahrefs or Moz’s Link Explorer. These tools will show you which backlinks were lost and the date that the link was removed. This can help you verify whether the loss of a backlink was the cause of your website traffic drop. If this was the cause, then you can try and follow up with the referring domain that dropped the backlink and see if there was any particular reason why the link was removed. Contacting the referring domain can give you a chance to regain the backlink.

See if there are any backlinks to broken pages

Sometimes backlinks are lost not because the referring domain removed them, but because the destination URL is broken. Again, link tracking tools can help you identify this type of lost backlink. You can then take action to regain the backlink. In most cases, this includes permanently redirecting (301) the URL to the new or replacement URL. This will result in the ‘link juice’ flowing back to your site.
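Once you have a backlink export and the HTTP status of each destination URL, listing the redirect candidates is straightforward. The sketch below is illustrative only: the function name and the input shapes are assumptions, since every link tracking tool exports its data in a different format.

```python
from collections import defaultdict

# Status codes treated as "broken" for this sketch: not found and gone.
BROKEN_STATUSES = {404, 410}


def broken_backlink_targets(backlinks, status_by_url):
    """Map each broken destination URL to the referring pages that link to it.

    `backlinks` is a list of (referring_page, destination_url) pairs and
    `status_by_url` maps each destination URL to its HTTP status code.
    """
    broken = defaultdict(list)
    for referring_page, destination_url in backlinks:
        if status_by_url.get(destination_url) in BROKEN_STATUSES:
            broken[destination_url].append(referring_page)
    return dict(broken)
```

Each key in the result is a URL that may need a 301 redirect, and the number of referring pages behind it gives a rough sense of which redirects to set up first.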

We hope the framework in this article has helped you understand why your site experienced a drop in organic traffic. While experiencing a traffic drop is frustrating, it is good to know that it’s a common problem that happens to even the best webmasters. Following these 6 steps will help you relieve that frustration by at least narrowing down the problem area, if not identifying the exact cause.