To ensure robust, high-quality mobile search results and protect the user experience, Baidu Search will upgrade to Hurricane Algorithm 3.0 in August 2019.

This upgrade primarily targets cross-domain acquisition and group site issues, and it covers PC, H5, and mini sites. Baidu will restrict a site's visibility in search results in proportion to the severity of the violations it discovers.

Here, we discuss the details of Baidu’s Hurricane Algorithm to help you better understand how to optimize for it.

Hurricane Algorithm History

Before this latest update, Baidu launched two initial versions of the Hurricane Algorithm:

- Hurricane Algorithm 1.0 launched in July 2017, cracking down on sites that relied on low-quality content collected from other sites, to give greater visibility to original, high-quality content.

- Hurricane Algorithm 2.0 launched in September 2018, targeting websites with plagiarized and duplicate content.

Hurricane Algorithm 3.0

In August 2019, Baidu Search upgraded to Hurricane Algorithm 3.0 to ensure robust, high-quality mobile search results and combat cross-domain acquisition and group site issues.

Cross-Domain Acquisition

Some websites and mini sites publish irrelevant content to get more traffic. This content is usually collected from random other sites and is of low quality, and therefore of little value to searchers. Baidu Search penalizes sites that lack focus on their field and limits their visibility on SERPs.

Cross-domain acquisition mainly includes two types of violations:

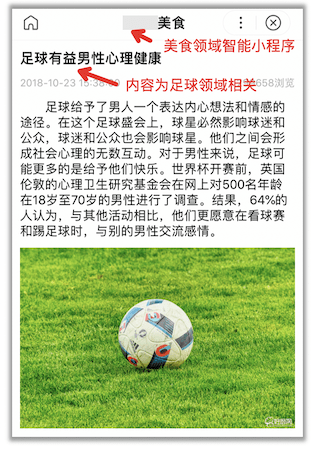

- The site's titles, keywords, and/or descriptions clearly indicate a specific industry or topic, but the content it publishes is not relevant to that industry or topic. An example is a food-based site that releases football-related content.

- The site has no clear field or industry, or its content spans multiple fields or industries, so its focus is vague and its content has low relevance to the true purpose of the site.

Group Site Issues

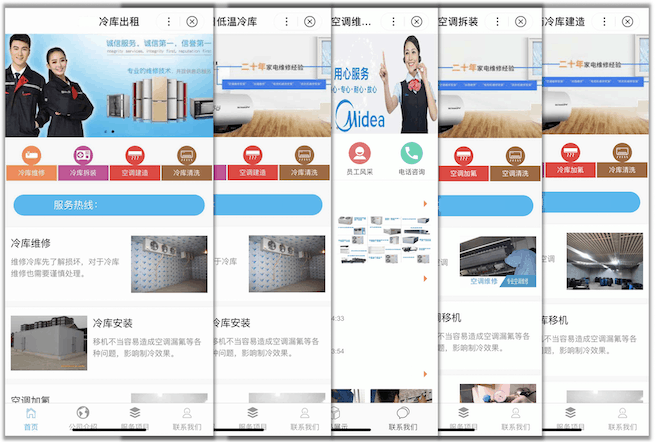

Some people construct multiple sites or mini sites in batches to attract search traffic. However, most of these site groups are low quality and have high content similarity. Some even reuse the same template. This makes it difficult for searchers to find the relevant information they’re looking for.

Hurricane Algorithm 3.0 is expected to launch in August 2019. Baidu Search reminds all webmasters to review their own sites and actively rectify issues to avoid any traffic losses.
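
As a starting point for that review, a webmaster could measure how similar pages across their own sites are to one another. The following is a minimal sketch, not Baidu's method: Baidu has not published how it measures similarity, so the technique (Jaccard similarity over word shingles), the function names, and the 0.5 threshold are all assumptions chosen for illustration. It also assumes whitespace-delimited text; Chinese content would need character-level shingles instead.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Text shorter than k words becomes a single shingle (or nothing).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity |A & B| / |A | B|; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_pairs(pages: dict, threshold: float = 0.5, k: int = 3) -> list:
    """List (name_a, name_b, score) for page pairs scoring at or above threshold.

    `pages` maps a hypothetical page identifier (e.g. a URL) to its body text.
    The threshold is an illustrative assumption, not a value from Baidu.
    """
    sets = {name: shingles(text, k) for name, text in pages.items()}
    pairs = []
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs
```

Pairs that score highly are candidates for manual review; whether they would actually trigger a penalty is something only Baidu's own evaluation can determine.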

How do we avoid being punished by Hurricane Algorithm 3.0?

The power of content to improve a website’s ranking performance is without question. Strong website content is clear, concise, and highly relevant, and it contains keywords that match both the page and the user's need.

As such, it is imperative to provide high-quality content with strong readability and a good user experience. Do not simply collect unrelated content from other sites or social accounts. To avoid penalties, your content must be relevant to your industry and to what searchers are looking for.